Back when I worked in the field, looking after customers' data storage setups, I ran into plenty of dramatic events where an application filled a volume until it went offline. Whatever the volume holds (logs, analytics, or business data), it is always painful to bring it back up: you either find a way to regain access to the server or, if you are lucky enough to have a shared SAN array, bring up another server and attach the data volumes to it. But what if you could prevent this from happening? Not just prevent it, but react to it dynamically and extend the filesystem before anything bad happens.

In this blog post I will share how I built yet another event-based architecture, for those of you using, or thinking of using, Pure Cloud Block Store (CBS) to provision data volumes for apps running on AWS EC2 instances. The same concept can be applied to EBS or other block storage offerings.

Architecture

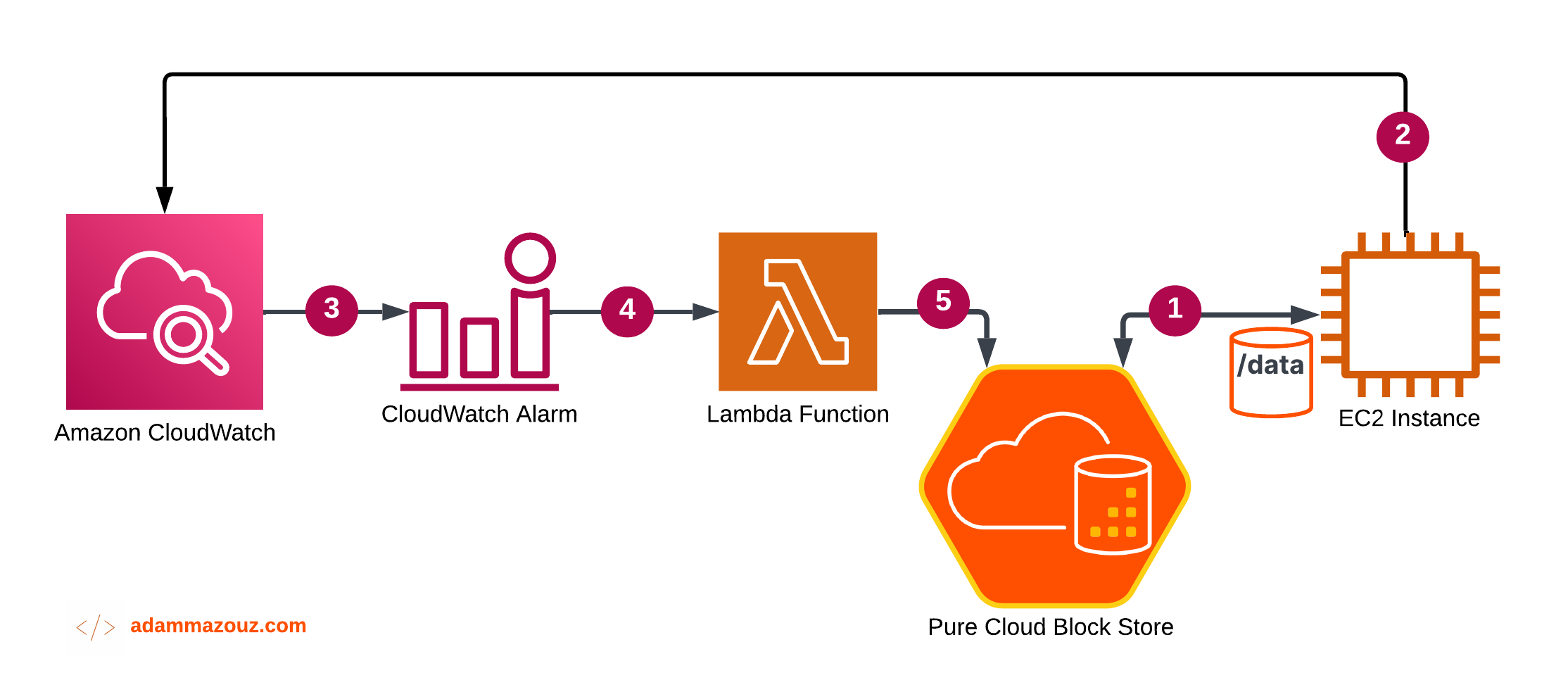

This architecture is based on four main components: Amazon CloudWatch, a Lambda function, an EC2 instance, and Pure Cloud Block Store. The figure above shows the workflow of the events.

The illustration can be broken down into five steps listed below:

- An application runs on the EC2 instance and uses a data volume provisioned from Pure CBS, mounted at /data.

- The EC2 instance is configured to send custom metrics (disk and filesystem usage) via the CloudWatch agent.

- CloudWatch uses the received metrics to measure the CBS volume usage percentage and triggers a CloudWatch alarm when usage reaches the 80% threshold.

- The CloudWatch alarm publishes to a Simple Notification Service (SNS) topic that is configured to trigger a Lambda function.

- The Lambda function receives the event and executes a Python script against the EC2 instance and Pure Cloud Block Store.

That was a high-level view of the architecture. The remainder of this post goes through how I configured each piece and put them all together.

EC2 Configuration

The EC2 instance used here runs Amazon Linux 2, so most of this post focuses on applications running on Linux.

Provision Data Volume

To provision a volume from Pure Cloud Block Store to your EC2 instance, follow the steps in the Pure CBS Implementing Guide. For this proof of concept I kept the volume small, at 10 GB, and mounted it on the /data directory, just so I could fill it quickly during testing.

Collect Metrics with the CloudWatch Agent

There are two ways to configure custom metrics collection with the CloudWatch agent: manually from the command line, or through AWS Systems Manager (SSM). AWS has well-documented steps for downloading the agent, attaching an IAM role, adding the configuration, and starting the agent. You can find all of it in the AWS CloudWatch agent documentation.

However, when you reach the step where you modify the CloudWatch agent configuration file to specify the metrics you want to collect, use the JSON file below. It specifies the following:

- Which resource to collect metrics for. In our case, /data, where I mounted the CBS volume.

- How frequently to collect the metrics.

- Which measurements to collect: free, total, and used file system capacity.

Here is an example cloudwatch-config.json file that monitors the data volume file system usage:

{
  "agent": {
    "metrics_collection_interval": 60,
    "logfile": "/opt/aws/amazon-cloudwatch-agent/logs/amazon-cloudwatch-agent.log"
  },
  "metrics": {
    "namespace": "CBSDataVolume",
    "metrics_collected": {
      "disk": {
        "resources": [
          "/data"
        ],
        "measurement": [
          {"name": "free", "rename": "VOL_FREE", "unit": "Gigabytes"},
          {"name": "total", "rename": "VOL_TOTAL", "unit": "Gigabytes"},
          {"name": "used", "rename": "VOL_USED", "unit": "Gigabytes"}
        ],
        "ignore_file_system_types": [
          "sysfs", "devtmpfs"
        ],
        "append_dimensions": {
          "customized_dimension_key_4": "customized_dimension_value_4"
        }
      }
    },
    "append_dimensions": {
      "ImageId": "${aws:ImageId}",
      "InstanceId": "${aws:InstanceId}",
      "InstanceType": "${aws:InstanceType}"
    },
    "aggregation_dimensions": [["ImageId"], ["InstanceId", "InstanceType"], ["d1"], []],
    "force_flush_interval": 30
  }
}

Save this file as /opt/aws/amazon-cloudwatch-agent/bin/config.json on your EC2 instance, then start the agent:

sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl -a fetch-config -m ec2 -s -c file:/opt/aws/amazon-cloudwatch-agent/bin/config.json
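If you track more than one mount point, the configuration can be generated rather than edited by hand. The sketch below is a minimal illustration and not part of the original setup: `build_agent_config` and its parameters are hypothetical names, and it reproduces only the fields from the JSON above that matter for this post.

```python
import json


def build_agent_config(mount_point, namespace="CBSDataVolume", interval=60):
    """Return a CloudWatch agent config dict that tracks one mount point."""
    # One measurement entry per disk metric, renamed as in the JSON above.
    measurements = [
        {"name": name, "rename": f"VOL_{name.upper()}", "unit": "Gigabytes"}
        for name in ("free", "total", "used")
    ]
    return {
        "agent": {"metrics_collection_interval": interval},
        "metrics": {
            "namespace": namespace,
            "metrics_collected": {
                "disk": {
                    "resources": [mount_point],
                    "measurement": measurements,
                    "ignore_file_system_types": ["sysfs", "devtmpfs"],
                }
            },
            "append_dimensions": {"InstanceId": "${aws:InstanceId}"},
        },
    }


if __name__ == "__main__":
    # Print the config so it can be redirected into config.json.
    print(json.dumps(build_agent_config("/data"), indent=2))
```

Keeping the builder as a plain function means the result can be inspected or diffed before it is written to the instance.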

Install Pure Python SDK

You will also need to install the Pure Storage Python SDK on your EC2 instance. You can follow the official documentation to install it.

Write Python script to Increase Volume Size

Here’s my Python script that uses the Pure Python SDK to increase the CBS volume size by 20%:

import os
import subprocess
import time

import purestorage

# Do not use this part on Prod arrays, please use certificates
import urllib3

urllib3.disable_warnings()

# Set the API token and endpoint for the Pure Storage FlashArray
api_token = os.environ['PURE_API_TOKEN']
endpoint = os.environ['PURE_ENDPOINT']

# Connect to the Pure Storage FlashArray using the API token and endpoint
client = purestorage.FlashArray(endpoint, api_token=api_token)

# Get the iSCSI volume name and its current size from the FlashArray
iscsi_vol_name = "my_volume_name"
iscsi_vol_size = client.get_volume(iscsi_vol_name)['size']

# Increase the iSCSI volume size by 20%
new_iscsi_vol_size = int(iscsi_vol_size * 1.2)
client.extend_volume(iscsi_vol_name, size=new_iscsi_vol_size)

# Brief pause before resizing the file system
time.sleep(5)

# Specify the device name for the ext4 file system
device_name = "/dev/dm-0"

# Resize the file system to use the entire available space on the device
resize_command = ["sudo", "resize2fs", device_name]

try:
    # Execute the resize command and capture the output
    resize_output = subprocess.check_output(resize_command, stderr=subprocess.STDOUT)
    print("File system resized successfully.")
    print(resize_output.decode("utf-8"))
except subprocess.CalledProcessError as e:
    print("Error resizing file system:")
    print(e.output.decode("utf-8"))

# Disconnect from the Pure Storage FlashArray
client.invalidate_cookie()

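Save this script on the EC2 instance as /home/ec2-user/script/increase_cbs_volume.py, the path that the Lambda function later in this post invokes. If you choose a different name or directory, adjust the Lambda command to match.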
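The size arithmetic in the script is worth isolating, since `int(iscsi_vol_size * 1.2)` can land on an arbitrary byte count. The sketch below is an illustration rather than part of the script above: `grown_size` is a hypothetical helper that applies the 20% growth with integer math and rounds the result up to a whole number of 512-byte sectors. The 512-byte alignment is my assumption, so check it against your array's documentation.

```python
SECTOR_BYTES = 512  # assumed alignment unit, not taken from the original script


def grown_size(current_bytes, growth_percent=20, align=SECTOR_BYTES):
    """Grow current_bytes by growth_percent, rounded up to a multiple of align."""
    if current_bytes <= 0:
        raise ValueError("current_bytes must be positive")
    # Work in integers: scale by (100 + percent), then ceil-divide by 100 * align.
    scaled = current_bytes * (100 + growth_percent)
    sectors = -(-scaled // (100 * align))
    return sectors * align


if __name__ == "__main__":
    ten_gib = 10 * 1024 ** 3
    print(grown_size(ten_gib))
```

Integer arithmetic avoids floating-point rounding on large byte counts, and rounding up guarantees the new size is never smaller than the requested growth.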

Export Environment Variables

The script above uses environment variables to retrieve the Pure CBS array authentication parameters.

export PURE_ENDPOINT='10.0.1.100'

export PURE_API_TOKEN='xxxxx-xxx-xxx-xxxxx'

A CBS API token can be generated using the GUI, the CLI, or API calls. Below is an example of how to use curl to get an API token with a username and password.

PURE_API_TOKEN=$(curl -s -k -X POST -H "Content-Type: application/json" -d '{"username": "'"$username"'", "password": "'"$password"'"}' "https://$PURE_ENDPOINT/api/1.19/auth/apitoken" | python3 -c 'import sys, json; print(json.load(sys.stdin)["api_token"])')

CloudWatch Alarm Configuration

Next, we need to configure an AWS CloudWatch alarm that triggers the Lambda function whenever the EC2 file system usage exceeds a certain threshold.

I used metric math on the collected VOL_TOTAL and VOL_USED metrics to calculate the free percentage.

The last step is to create an SNS topic and configure the alarm behavior.

If the calculated free space is lower than or equal to 20%, the alarm notifies the SNS topic, which in turn triggers the Lambda function.
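For readers who prefer code to console clicks, the alarm described above can be sketched with boto3's `put_metric_alarm`. This is a minimal sketch under assumptions, not the exact alarm I built: the alarm name, the query ids, and the `build_alarm_request` and `create_alarm` helpers are hypothetical, and the `dimensions` you pass must match the dimensions your agent actually publishes for these metrics.

```python
def build_alarm_request(topic_arn, dimensions, namespace="CBSDataVolume",
                        threshold_percent=20, period=60):
    """Build put_metric_alarm arguments for a free-space percentage alarm."""

    def metric(query_id, name):
        # Input metric for the math expression; not alarmed on directly.
        return {
            "Id": query_id,
            "MetricStat": {
                "Metric": {
                    "Namespace": namespace,
                    "MetricName": name,
                    "Dimensions": dimensions,
                },
                "Period": period,
                "Stat": "Average",
            },
            "ReturnData": False,
        }

    return {
        "AlarmName": "cbs-data-volume-free-space",  # hypothetical name
        "ComparisonOperator": "LessThanOrEqualToThreshold",
        "Threshold": threshold_percent,
        "EvaluationPeriods": 1,
        "AlarmActions": [topic_arn],
        "Metrics": [
            metric("total", "VOL_TOTAL"),
            metric("used", "VOL_USED"),
            {
                "Id": "free_pct",
                "Expression": "100 * (total - used) / total",
                "Label": "Free space (%)",
                "ReturnData": True,  # the alarm evaluates this expression
            },
        ],
    }


def create_alarm(request):
    """Send the request to CloudWatch (needs boto3 and AWS credentials)."""
    import boto3  # imported here so the builder works without AWS access

    boto3.client("cloudwatch").put_metric_alarm(**request)
```

A call would look like `create_alarm(build_alarm_request(topic_arn, [{"Name": "InstanceId", "Value": "i-..."}]))`, with the ARN and instance ID replaced by your own values.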

Lambda Configuration

Next, we need to create an AWS Lambda function that runs the Python script when triggered by the SNS notification from the CloudWatch alarm.

The function is created with the Python 3.9 runtime, and the timeout is increased to 10 seconds.

Here’s an example Python script for the Lambda function:

import json
import logging
import time

import boto3

# Set logging level
logger = logging.getLogger()
logger.setLevel(logging.INFO)

# Statuses that mean the command has not finished yet
PENDING_STATUSES = ('Pending', 'InProgress', 'Delayed')


def lambda_handler(event, context):
    # Get the SNS message from the event; SNS delivers it as a JSON string
    sns_message = json.loads(event['Records'][0]['Sns']['Message'])

    # Create an SSM client
    ssm_client = boto3.client('ssm')

    # Get the EC2 instance ID from the SNS message.
    # This assumes the message body carries an 'instance_id' key.
    instance_id = sns_message['instance_id']

    response = ssm_client.send_command(
        InstanceIds=[instance_id],
        DocumentName='AWS-RunShellScript',
        Parameters={
            'commands': [
                # Run the Python script to increase the volume size
                'python3 /home/ec2-user/script/increase_cbs_volume.py'
            ]
        }
    )

    # Get the command ID from the response
    command_id = response['Command']['CommandId']
    logger.info("command_id=%s instance_id=%s", command_id, instance_id)

    # Wait for the command to complete and get the output
    output = ''
    while True:
        # Pause between polls instead of spinning on the API
        time.sleep(1)
        ssm_response = ssm_client.get_command_invocation(
            CommandId=command_id,
            InstanceId=instance_id
        )
        if ssm_response['Status'] in PENDING_STATUSES:
            continue
        elif ssm_response['Status'] == 'Success':
            output = ssm_response['StandardOutputContent']
            break
        else:
            output = ssm_response['StandardErrorContent']
            break

    return output
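The handler reads the instance ID from a top-level key in the message. If your alarm sends the stock CloudWatch notification instead, the instance ID sits inside the alarm's trigger description. The sketch below shows one way to dig it out. It is an assumption-laden illustration: `instance_id_from_sns_event` is a hypothetical helper, and the `Trigger`, `Dimensions`, and `Metrics` layout reflects my understanding of the notification format, so verify it against a real message from your topic.

```python
import json


def instance_id_from_sns_event(event):
    """Return the InstanceId dimension from an alarm notification, or None."""
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    trigger = message.get("Trigger", {})

    # Single-metric alarms: dimensions sit directly under Trigger.
    candidates = list(trigger.get("Dimensions", []))
    # Metric math alarms: dimensions sit under each input metric.
    for query in trigger.get("Metrics", []):
        metric = query.get("MetricStat", {}).get("Metric", {})
        candidates.extend(metric.get("Dimensions", []))

    for dim in candidates:
        # Accept either key casing, since it is not guaranteed here.
        name = dim.get("name", dim.get("Name"))
        if name == "InstanceId":
            return dim.get("value", dim.get("Value"))
    return None
```

Returning `None` rather than raising lets the handler decide whether a missing instance ID should be logged, retried, or treated as an error.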

This should be it, let’s test it all now 🤞.

Testing

Since the attached data volume is only 10 GB, it is easy to fill with the dd command. The aim is to reach the alarm state, where the free capacity is at or below 20%.

sudo dd if=/dev/zero of=fill_volume bs=$((1024*1024)) count=$((9*1024))

First, I get a notification email, since I subscribed to the SNS topic.

If I check CloudWatch, I see the alarm in the In alarm state.

A few seconds later, the alarm goes back to the OK state, and if I log in to the EC2 instance and run df -h, I find that the data volume has been extended.
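The same check can be scripted instead of read off df -h by eye. The snippet below is a small sketch using only the standard library. The helper names are hypothetical, and `free_percent` simply mirrors the (total - used) / total calculation the alarm uses.

```python
import shutil


def free_percent(total, used):
    """Return free space as a percentage of total capacity."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * (total - used) / total


def mount_free_percent(path="/data"):
    """Compute the free percentage for the file system holding `path`."""
    usage = shutil.disk_usage(path)
    return free_percent(usage.total, usage.used)


if __name__ == "__main__":
    # Report on the current directory's file system.
    print(f"{mount_free_percent('.'):.1f}% free")
```

Running it before and after the dd test gives a quick confirmation that the free percentage dropped to the threshold and then recovered once the volume was extended.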

Closing thoughts

Dealing with storage expansion is always a pain. Adopting a workflow like this makes it efficient to react to such events dynamically, especially if your storage system supports programmatic calls and configuration.

Overall, this post automated the volume size increase for one application running on one EC2 instance. I will be investigating how to scale this using AWS Systems Manager, and also how to bring the volume increase logic into the Lambda function rather than executing it from the application host itself. Hope you enjoyed the read and found it helpful. Till the next one :)
